Track Multiple Conversion Goals for A/B Testing

We’ve made critical improvements to A/B Test reporting in Crazy Egg, making it far easier to compare your results for different Conversion Goals — and to see how your test performance varies over time.

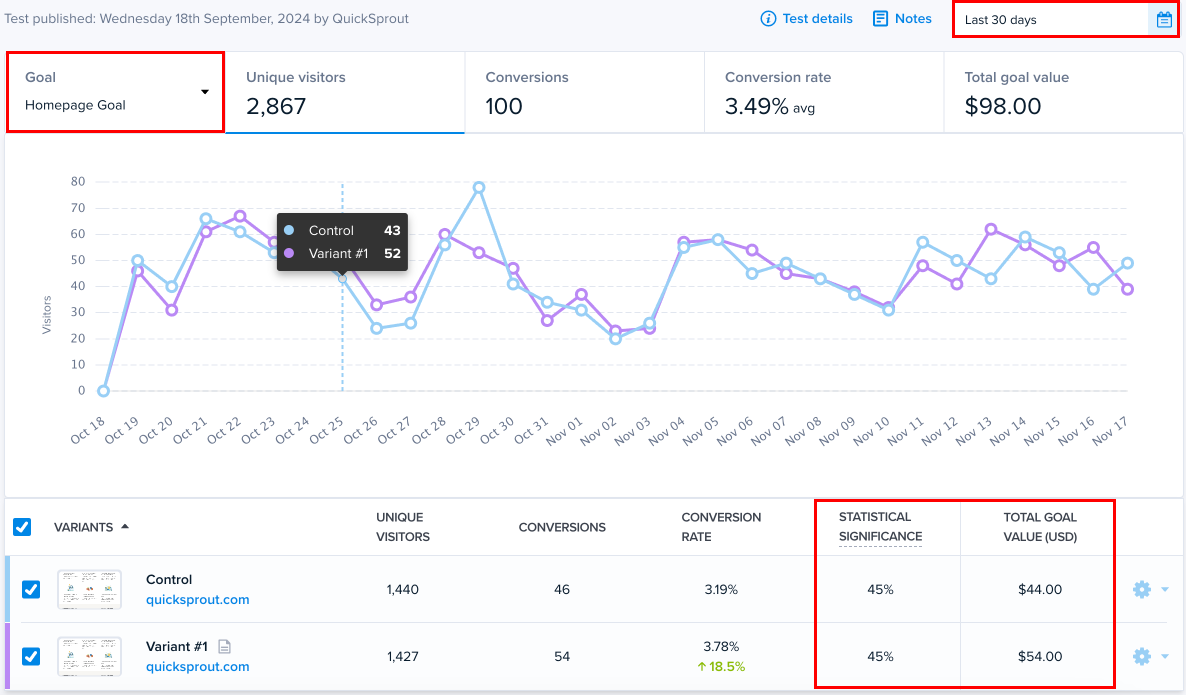

First: the improved A/B Testing dashboard now displays the best-performing page variant for each test, based on your primary Conversion Goal and the statistical significance of your results.

At a glance, you can see how your test is performing so far, and how confident you can be that the outcome isn’t just the result of chance or statistical noise.
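To make "not just the result of chance" a little more concrete, here is a minimal sketch of one common way to check significance between two variants: a two-proportion z-test. This is an illustration only, not a description of how Crazy Egg calculates significance. The function name and the visitor and conversion counts are hypothetical.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between two conversion rates.

    conv_a, conv_b: number of conversions for each variant (hypothetical inputs)
    n_a, n_b: number of visitors for each variant (hypothetical inputs)
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both variants convert equally well
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Made-up example: control converts 120 of 2,400 visitors, variant 156 of 2,400
z, p = two_proportion_z_test(conv_a=120, n_a=2400, conv_b=156, n_b=2400)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (0.05 is a common cutoff) suggests the gap between the variants is unlikely to be noise alone. Checking this by hand is rarely necessary, but it can help when reading a confidence figure on a dashboard.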

Tests on the dashboard can now also be sorted by Created Date, Name, or Number of Visitors, making it easy to find the test results you’re looking for.

Second: we’ve fully redesigned the A/B Testing Results page for each test, so you can easily visualize how your test has performed for any given date range.

These improvements include:

- Track Multiple Conversion Goals — View results for a different Conversion Goal by selecting it from the Goal menu in the top left corner.

- Date Range Filters — Filter all of your test results by start and end date.

- Time-Series Charts — Visualize how your test metrics have performed by day, for one or multiple Conversion Goals.

- Results Table — See metrics and edit settings for your page variants, all in a single table. You can sort the list of page variants by clicking any of the column titles.

- Note Taking — Keep notes in the same view to track your work so far. Record Test Notes by clicking the “Notes” button to the left of the date filter. Edit Variant Descriptions by clicking the gear icon for the test variant, then clicking “Edit description”.

Early Access users have already begun using this improved reporting to:

- Decide whether to fully implement a new test variant design, based on whether the test has reached statistically significant results.

- See whether page variants have improved their performance over time, compared to earlier in your test. This is useful if you’ve previously made adjustments to your test settings, want to make adjustments without adding a completely new variant, or see a lot of seasonality in your user behavior.

- See whether page variants have performed better on a different Conversion Goal, compared to the one you originally targeted.

These redesigned reports are available now for all A/B Tests, both new and existing. Happy analyzing!